Topics

- Nonparametric Estimation

- Glivenko-Cantelli Theorem

- Dvoretzky-Kiefer-Wolfowitz Inequality

- Plug-in Estimator

- Nonparametric Confidence Intervals via Bootstrap

- Nonparametric Hypothesis Testing

- Permutation Testing

- Permutation Testing with Shells Data

Inference and Estimation: The Big Picture

The big picture is inference and estimation, with an emphasis on problems where computational techniques are helpful.

|  | Point estimation | Set estimation | Hypothesis testing |
|---|---|---|---|
| Parametric | MLE of finitely many parameters | Asymptotically normal confidence intervals | Wald test from confidence interval |
| Non-parametric | about to see ... | about to see ... | about to see ... |

So far we have seen parametric models, for example:

- $X_1, X_2, \ldots, X_n \overset{IID}{\sim} Bernoulli (\theta)$, $\theta \in [0,1]$

- $X_1, X_2, \ldots, X_n \overset{IID}{\sim} Exponential (\lambda)$, $\lambda \in (0,\infty)$

- $X_1, X_2, \ldots, X_n \overset{IID}{\sim} Normal(\mu, \sigma)$, $\mu \in \mathbb{R}$, $\sigma \in (0,\infty)$

In all these cases the parameter space (the space within which the parameter(s) can take values) is finite-dimensional:

- for the $Bernoulli$, $\theta \in [0,1] \subseteq \mathbb{R}^1$

- for the $Exponential$, $\lambda \in (0, \infty) \subseteq \mathbb{R}^1$

- for the $Normal$, $\mu \in \mathbb{R}^1$ and $\sigma \in (0,\infty) \subseteq \mathbb{R}^1$, so $(\mu, \sigma) \in \mathbb{R} \times (0,\infty) \subseteq \mathbb{R}^2$

For parametric experiments we can, for instance, use the maximum likelihood principle and estimate the parameters with the Maximum Likelihood Estimator (MLE).
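Here is a minimal Python sketch of what the MLE gives for the three examples above. It assumes $\lambda$ is the *rate* of the $Exponential$ and $\sigma$ is the *standard deviation* of the $Normal$. Under those assumptions each estimator has a closed form:

- the sample mean for $\theta$;
- one over the sample mean for $\lambda$;
- the sample mean and the root mean squared deviation (dividing by $n$) for $(\mu, \sigma)$.

The function names and the simulated data are illustrative only.

```python
import math
import random

def bernoulli_mle(xs):
    """MLE of theta for IID Bernoulli(theta) data: the sample mean."""
    return sum(xs) / len(xs)

def exponential_mle(xs):
    """MLE of the rate lambda for IID Exponential(lambda) data: 1 / sample mean."""
    return len(xs) / sum(xs)

def normal_mle(xs):
    """MLE of (mu, sigma) for IID Normal(mu, sigma) data, sigma = standard deviation.

    The MLE of sigma divides by n (not n - 1), so it is slightly biased.
    """
    n = len(xs)
    mu_hat = sum(xs) / n
    sigma_hat = math.sqrt(sum((x - mu_hat) ** 2 for x in xs) / n)
    return mu_hat, sigma_hat

# Simulated data with known true parameters, to check the estimators.
random.seed(0)
coin = [1 if random.random() < 0.3 else 0 for _ in range(10_000)]
waits = [random.expovariate(2.0) for _ in range(10_000)]    # rate 2.0
heights = [random.gauss(170.0, 10.0) for _ in range(10_000)]

print(bernoulli_mle(coin))      # should be near 0.3
print(exponential_mle(waits))   # should be near 2.0
print(normal_mle(heights))      # should be near (170.0, 10.0)
```

Each parameter space here is finite-dimensional, so the whole estimation problem reduces to computing a handful of numbers from the data.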

Non-parametric estimation

What if we don't know what the distribution function (DF) is? Then we are not trying to estimate some fixed but unknown parameter $\theta^*$ of an RV we assume to be $Bernoulli(\theta^*)$; we are trying to estimate the DF itself. In real life, data do not come neatly labelled "I am a realisation of a $Bernoulli$ RV" or "I am a realisation of an $Exponential$ RV", so an important part of inference and estimation is making inferences about the DF itself from our observations.

Observations from some unknown process

Consider the following non-parametric product experiment:

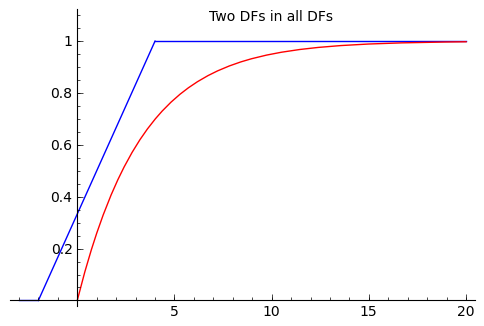

$$X_1, X_2, \ldots, X_n\ \overset{IID}{\sim} F^* \in \{\text{all DFs}\}$$

We want to produce a point estimate for $F^*$, which is allowed to be any DF ("lives in the set of all DFs"), i.e., $F^* \in \{\text{all DFs}\}$.

Crucially, $\{\text{all DFs}\}$, the set of all distribution functions over $\mathbb{R}$, is infinite-dimensional, in contrast to the finite-dimensional parameter spaces above.
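Here is a minimal Python sketch of this experiment. The function `sample_unknown_process` and its recipe (a 70/30 mixture of an Exponential with rate 1 and a Normal with mean 5 and standard deviation 1) are hypothetical stand-ins for $F^*$. The point is only that a non-parametric method receives the draws $X_1, \ldots, X_n$ without being told which DF generated them.

```python
import random

def sample_unknown_process(n, seed=None):
    """Hypothetical stand-in for an unknown F*: returns n IID draws.

    Recipe (hidden from the analyst in practice): with probability 0.7 draw
    from Exponential(rate 1), otherwise from Normal(mean 5, sd 1).
    """
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        if rng.random() < 0.7:
            draws.append(rng.expovariate(1.0))
        else:
            draws.append(rng.gauss(5.0, 1.0))
    return draws

# All the analyst gets to see: a list of numbers.
xs = sample_unknown_process(10, seed=42)
print(xs)
```

Because the recipe stays inside the function, any estimate of $F^*$ has to be built from `xs` alone.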

We have already seen an estimate of a distribution function made from the data: the empirical (or data-based) distribution function, also called the empirical cumulative distribution function (ECDF). It can be formalized as the average of the indicator functions of the half-lines beginning at the data points $[X_1,+\infty),[X_2,+\infty),\ldots,[X_n,+\infty)$:

$$\widehat{F}_n (x) = \frac{1}{n} \sum_{i=1}^n \mathbf{1}_{[X_i,+\infty)}(x)$$

where

$$\mathbf{1}_{[X_i,+\infty)}(x) := \begin{cases} & 1 \quad \text{ if } X_i \leq x \\ & 0 \quad \text{ if }X_i > x \end{cases}$$
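Here is a minimal Python sketch of $\widehat{F}_n$, with two implementations:

- `ecdf_direct` is a literal transcription of the formula as an average of indicators, costing $O(n)$ per evaluation.
- `ecdf` sorts the data once and then uses `bisect.bisect_right` to count how many $X_i \leq x$, so each evaluation costs $O(\log n)$.

Both names are illustrative, not taken from a particular library.

```python
import bisect

def ecdf_direct(data, x):
    """Literal formula: (1/n) * sum of indicators 1_{[X_i, +inf)}(x)."""
    return sum(1 for xi in data if xi <= x) / len(data)

def ecdf(data):
    """Return the empirical DF x -> (1/n) * #{i : X_i <= x} as a function."""
    xs = sorted(data)
    n = len(xs)

    def F_hat(x):
        # bisect_right returns the number of sorted data points <= x,
        # which equals the sum of the indicators 1_{[X_i, +inf)}(x).
        return bisect.bisect_right(xs, x) / n

    return F_hat

data = [2.0, 1.0, 3.0, 2.0]
F_hat = ecdf(data)
for x in [0.5, 1.0, 1.5, 2.0, 3.0, 10.0]:
    print(x, F_hat(x), ecdf_direct(data, x))
```

$\widehat{F}_n$ is a right-continuous step function. Here it jumps by $1/4$ at $1.0$ and at $3.0$, and by $2/4$ at the tied value $2.0$.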