Remark: When a phenomenon of interest (e.g., traffic or supply-chain management, or predictive maintenance of an expensive component in a national grid or system of power plants) is too complex to be explained by a tractable mathematical model, you have little choice but to resort to simulations driven by pseudo-random numbers, which are themselves built on modular arithmetic.

John von Neumann invented one such pseudo-random number generator, called the middle-square method, for solving complex problems in the Manhattan Project.

Finally, the statistical inference methods you are about to see can be combined with simulation to conduct simulation-intensive statistical inference! These are called Monte Carlo methods, and there is an interesting folk tale from Los Alamos behind the name.

The starting point for Monte Carlo methods is modular arithmetic ...

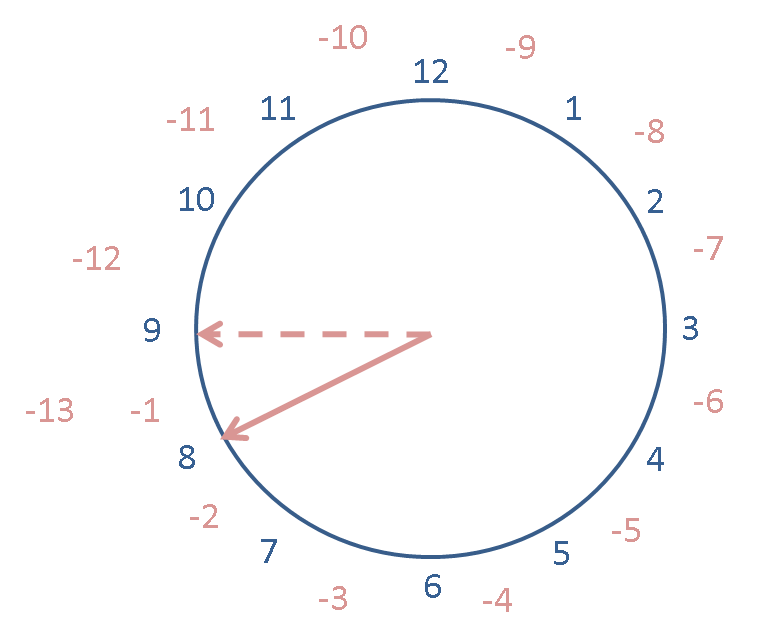

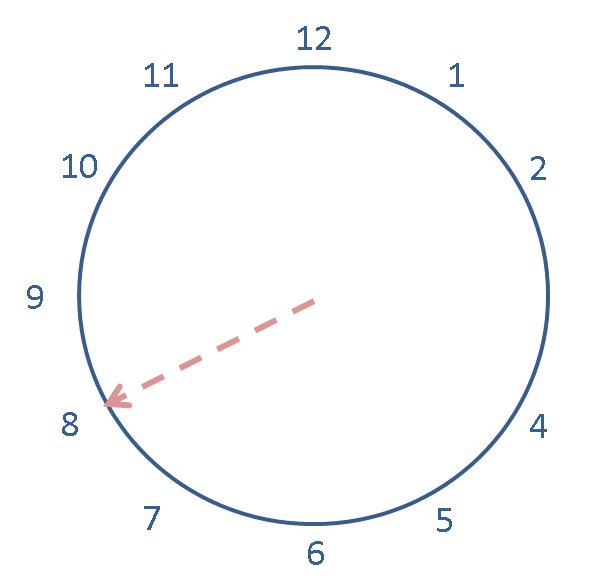

Modular arithmetic

Modular arithmetic (arithmetic modulo $m$) is a central theme in number theory and is crucial for generating random numbers on a computer (i.e., machine implementation of probabilistic objects). Generating such numbers is essential for computational statistical experiments, and the methods that rely on them are called Monte Carlo methods. Because they are produced by a deterministic procedure, computer-generated random numbers are technically called pseudo-random numbers.
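To make the link between modular arithmetic and pseudo-random numbers concrete, here is a minimal sketch of a linear congruential generator in plain Python, using the recurrence $x_{k+1} = (a x_k + c) \bmod m$. The function name `lcg` and the toy parameters $m = 16$, $a = 5$, $c = 3$ are illustrative assumptions, not values taken from this notebook, and they are far too small for real use.

```python
def lcg(m, a, c, seed, n):
    """Return the first n values of x_{k+1} = (a * x_k + c) mod m, starting from seed.

    Hypothetical helper for illustration only.
    """
    values = []
    x = seed
    for _ in range(n):
        x = (a * x + c) % m  # the modular step that keeps x in {0, ..., m-1}
        values.append(x)
    return values

# Toy parameters (assumed for illustration; not suitable for real simulations)
m = 16
seq = lcg(m=m, a=5, c=3, seed=1, n=16)
print(seq)

# Dividing by m rescales the integers into the interval [0, 1)
uniforms = [x / m for x in seq]
print(uniforms[:4])
```

With these particular parameters the sequence visits every residue in $\{0, 1, \ldots, 15\}$ exactly once before repeating, which shows both the appeal (it looks scrambled) and the limitation (it is fully determined by the seed) of such a generator.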

In this notebook we are going to learn to add and multiply modulo $m$. This part of our notebook is adapted from William Stein's SageMath worksheet on Modular Arithmetic, with linear congruential generators as the intended application. If you want a more thorough treatment, see Modular Arithmetic as displayed below.
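As a first taste of adding and multiplying modulo $m$, the following sketch uses plain Python's `%` operator. The helper names `add_mod` and `mul_mod` and the choice $m = 12$ (think of a 12-hour clock) are assumptions made for illustration; SageMath offers its own dedicated objects for this, which are not used here.

```python
def add_mod(a, b, m):
    """Add a and b, then reduce the result modulo m."""
    return (a + b) % m

def mul_mod(a, b, m):
    """Multiply a and b, then reduce the result modulo m."""
    return (a * b) % m

m = 12  # assumed modulus, mimicking a 12-hour clock

print(add_mod(9, 5, m))   # 9 + 5 = 14, and 14 leaves remainder 2 when divided by 12
print(mul_mod(7, 5, m))   # 7 * 5 = 35, and 35 leaves remainder 11 when divided by 12

# Reducing the inputs first gives the same answer as reducing at the end
print(mul_mod(19, 17, m) == mul_mod(19 % m, 17 % m, m))
```

The last line illustrates why the arithmetic is well defined: the result depends only on the remainders of the inputs, not on which representatives are chosen.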